AI Web Scraper - Powered by Crawl4AI

Pricing

$8.00 / 1,000 results


An AI web scraper powered by Crawl4AI, built for LLMs, AI agents, AI automation, model training, sentiment analysis, and content generation. It supports deep crawling, multiple extraction strategies, and flexible output (Markdown or JSON), and it integrates with Make.com, n8n, and Zapier.

Rating: 1.0 (1 review)



Total users: 178

Monthly users: 38

Runs succeeded: >99%

Issues response: 1.1 hours

Last modified: 3 months ago

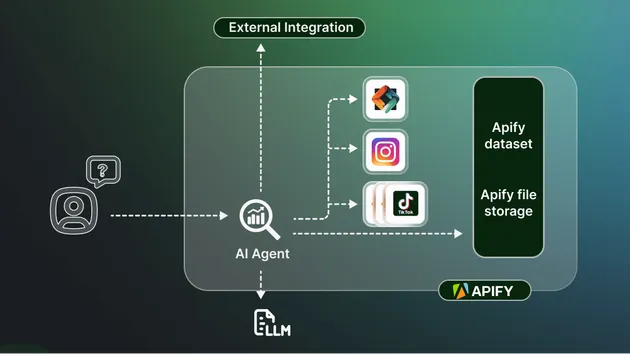

You can access the AI Web Scraper - Powered by Crawl4AI programmatically from your own applications through the Apify API. To do so, you need an Apify account and an API token, which you can find in the Integrations settings in Apify Console.
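The official Python client wraps Apify's REST API. As a rough, dependency-free sketch of what a single call looks like, the snippet below builds a request to the API v2 `run-sync-get-dataset-items` endpoint, which starts an Actor run, waits for it, and returns the run's default dataset items as JSON. The helper names `build_run_sync_request` and `run_actor_sync` are hypothetical, and the 300-second socket timeout is an arbitrary choice; check the Apify API reference before relying on this.

```python
import json
import urllib.request

# Base URL of Apify's public REST API, version 2.
APIFY_API_BASE = "https://api.apify.com/v2"


def build_run_sync_request(actor_id: str, token: str, run_input: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request that runs an Actor and returns
    its default dataset items. Hypothetical helper for illustration."""
    # API paths write "username/actor-name" as "username~actor-name".
    path_id = actor_id.replace("/", "~")
    url = f"{APIFY_API_BASE}/acts/{path_id}/run-sync-get-dataset-items"
    return urllib.request.Request(
        url,
        data=json.dumps(run_input).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Passing the token in a header keeps it out of the URL.
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


def run_actor_sync(actor_id: str, token: str, run_input: dict, timeout: float = 300.0) -> list:
    """Send the request and decode the JSON array of dataset items.
    Hypothetical helper; the timeout value is an arbitrary choice."""
    request = build_run_sync_request(actor_id, token, run_input)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `run_actor_sync("raizen/ai-web-scraper", "<YOUR_API_TOKEN>", run_input)` with the same `run_input` dictionary as in the client example blocks until the run finishes. The synchronous endpoint only waits a limited time, so for long deep crawls the client's `call()`, which starts the run and then waits for it, is usually the better fit.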

```python
from apify_client import ApifyClient

# Initialize the ApifyClient with your Apify API token
# Replace '<YOUR_API_TOKEN>' with your token.
client = ApifyClient("<YOUR_API_TOKEN>")

# Prepare the Actor input
run_input = {
    "startUrls": [{ "url": "https://www.cnbc.com/2025/03/12/googles-deepmind-says-it-will-use-ai-models-to-power-physical-robots.html" }],
    "browserConfig": {
        "browser_type": "chromium",
        "headless": True,
        "verbose_logging": False,
        "ignore_https_errors": True,
        "user_agent": "random",
        "proxy": "",
        "viewport_width": 1280,
        "viewport_height": 720,
        "accept_downloads": False,
        "extra_headers": {},
    },
    "crawlerConfig": {
        "cache_mode": "BYPASS",
        "page_timeout": 20000,
        "simulate_user": True,
        "override_navigator": True,
        "magic": True,
        "remove_overlay_elements": True,
        "delay_before_return_html": 0.75,
        "wait_for": "",
        "screenshot": False,
        "pdf": False,
        "enable_rate_limiting": False,
        "memory_threshold_percent": 90,
        "word_count_threshold": 200,
        "css_selector": "",
        "excluded_tags": [],
        "excluded_selector": "",
        "only_text": False,
        "prettify": False,
        "keep_data_attributes": False,
        "remove_forms": False,
        "bypass_cache": False,
        "disable_cache": False,
        "no_cache_read": False,
        "no_cache_write": False,
        "wait_until": "domcontentloaded",
        "wait_for_images": False,
        "check_robots_txt": False,
        "mean_delay": 0.1,
        "max_range": 0.3,
        "js_code": "",
        "js_only": False,
        "ignore_body_visibility": True,
        "scan_full_page": False,
        "scroll_delay": 0.2,
        "process_iframes": False,
        "adjust_viewport_to_content": False,
        "screenshot_wait_for": 0,
        "screenshot_height_threshold": 20000,
        "image_description_min_word_threshold": 50,
        "image_score_threshold": 3,
        "exclude_external_images": False,
        "exclude_social_media_domains": [],
        "exclude_external_links": False,
        "exclude_social_media_links": False,
        "exclude_domains": [],
        "verbose": True,
        "log_console": False,
        "stream": False,
    },
    "deepCrawlConfig": {
        "max_pages": 100,
        "max_depth": 3,
        "include_external": False,
        "score_threshold": 0.5,
        "filter_chain": [],
        "keywords": [
            "crawl",
            "example",
            "async",
            "configuration",
        ],
        "weight": 0.7,
    },
    "markdownConfig": {
        "ignore_links": False,
        "ignore_images": False,
        "escape_html": True,
        "skip_internal_links": False,
        "include_sup_sub": False,
        "citations": False,
        "body_width": 80,
        "fit_markdown": False,
    },
    "contentFilterConfig": {
        "type": "pruning",
        "user_query": "",
        "threshold": 0.45,
        "min_word_threshold": 5,
        "bm25_threshold": 1.2,
        "apply_llm_filter": False,
        "semantic_filter": "",
        "word_count_threshold": 10,
        "sim_threshold": 0.3,
        "max_dist": 0.2,
        "top_k": 3,
        "linkage_method": "ward",
    },
    "userAgentConfig": {
        "user_agent_mode": "random",
        "device_type": "desktop",
        "browser_type": "chrome",
        "num_browsers": 1,
    },
    "llmConfig": {
        "provider": "groq/deepseek-r1-distill-llama-70b",
        "api_token": "",
        "instruction": "Summarize content in clean markdown.",
        "base_url": "",
        "chunk_token_threshold": 2048,
        "apply_chunking": True,
        "input_format": "markdown",
        "temperature": 0.7,
        "max_tokens": 4096,
    },
    "extractionSchema": {
        "name": "Custom Extraction",
        "baseSelector": "div.article",
        "fields": [
            {
                "name": "title",
                "selector": "h1",
                "type": "text",
            },
            {
                "name": "link",
                "selector": "a",
                "type": "attribute",
                "attribute": "href",
            },
        ],
    },
}

# Run the Actor and wait for it to finish
run = client.actor("raizen/ai-web-scraper").call(run_input=run_input)

# Fetch and print Actor results from the run's dataset (if there are any)
print("💾 Check your data here: https://console.apify.com/storage/datasets/" + run["defaultDatasetId"])
for item in client.dataset(run["defaultDatasetId"]).iterate_items():
    print(item)
```
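The `deepCrawlConfig` fields in the input above bound how far a crawl spreads from the start URL. As an illustration only (this is not the Actor's or Crawl4AI's code), here is a toy breadth-first crawl over an in-memory link graph. It assumes `max_depth` counts link hops from the start URL, `max_pages` caps the number of pages visited, and `include_external` decides whether links to other hosts are followed; its defaults mirror the example input. It ignores the keyword scoring (`keywords`, `weight`, `score_threshold`) entirely, and `bounded_bfs` is a hypothetical name.

```python
from collections import deque
from urllib.parse import urlparse


def bounded_bfs(start_url, links, max_depth=3, max_pages=100, include_external=False):
    """Toy breadth-first crawl over an in-memory link graph.

    `links` maps a URL to the URLs found on that page; it stands in for
    actually fetching and parsing pages. Returns URLs in visit order.
    """
    start_host = urlparse(start_url).netloc
    visited = []
    seen = {start_url}  # URLs already queued, so nothing is visited twice
    queue = deque([(start_url, 0)])  # (url, link hops from start_url)
    while queue and len(visited) < max_pages:
        url, depth = queue.popleft()
        visited.append(url)
        if depth >= max_depth:
            continue  # page is visited, but its links are not followed
        for target in links.get(url, []):
            if target in seen:
                continue
            if not include_external and urlparse(target).netloc != start_host:
                continue  # stay on the start URL's host
            seen.add(target)
            queue.append((target, depth + 1))
    return visited
```

Whichever limit is hit first ends the crawl: a shallow `max_depth` stops expansion early, while `max_pages` caps the total cost even on a densely linked site.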

📚 Want to learn more? Go to https://docs.apify.com/api/client/python/docs/quick-start

AI Web Scraper - Crawl4AI for LLMs, AI Agents & Automation API in Python

The Apify API client for Python is the official library for using the AI Web Scraper - Powered by Crawl4AI API from Python. It provides convenience functions and automatically retries requests that fail.
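Automatic retries mean, roughly, that a failed request is sent again after a pause. The generic sketch below shows the usual shape of such logic, exponential backoff with a little random jitter; it is not the client's actual retry policy, and `with_retries` is a hypothetical helper. It could wrap any call that talks to the API, for example a `urllib.request.urlopen` call. By default it retries any `OSError`, which in `urllib` also covers HTTP 4xx errors; a production version would retry only errors likely to be transient, such as timeouts, HTTP 429, and 5xx responses.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    fn: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    retry_on: tuple = (OSError,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn(), retrying on `retry_on` exceptions with exponential backoff.
    Hypothetical helper; not the Apify client's implementation."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 1
    while True:
        try:
            return fn()
        except retry_on:
            if attempt >= max_attempts:
                raise  # out of attempts: surface the last error
            # Delay doubles each attempt (0.5 s, 1 s, 2 s, ...) plus up to 0.1 s of jitter.
            sleep(base_delay * 2 ** (attempt - 1) + random.uniform(0, 0.1))
            attempt += 1
```

The `sleep` parameter is injectable so the backoff schedule can be checked without actually waiting.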

Install the apify-client

$ pip install apify-client

Other API clients include: