LinkedIn Jobs & Company Scraper

Pricing

$19.99/month + usage

Actively maintained, with low rental and run costs. This LinkedIn Jobs Scraper extracts job listings and company profiles worldwide. Export results for analysis, connect via API, and integrate with other apps. Please note that LinkedIn may block some requests, leading to fewer results than expected.

1.2 (4)

Issues response

7.6 days

Last modified

8 days ago

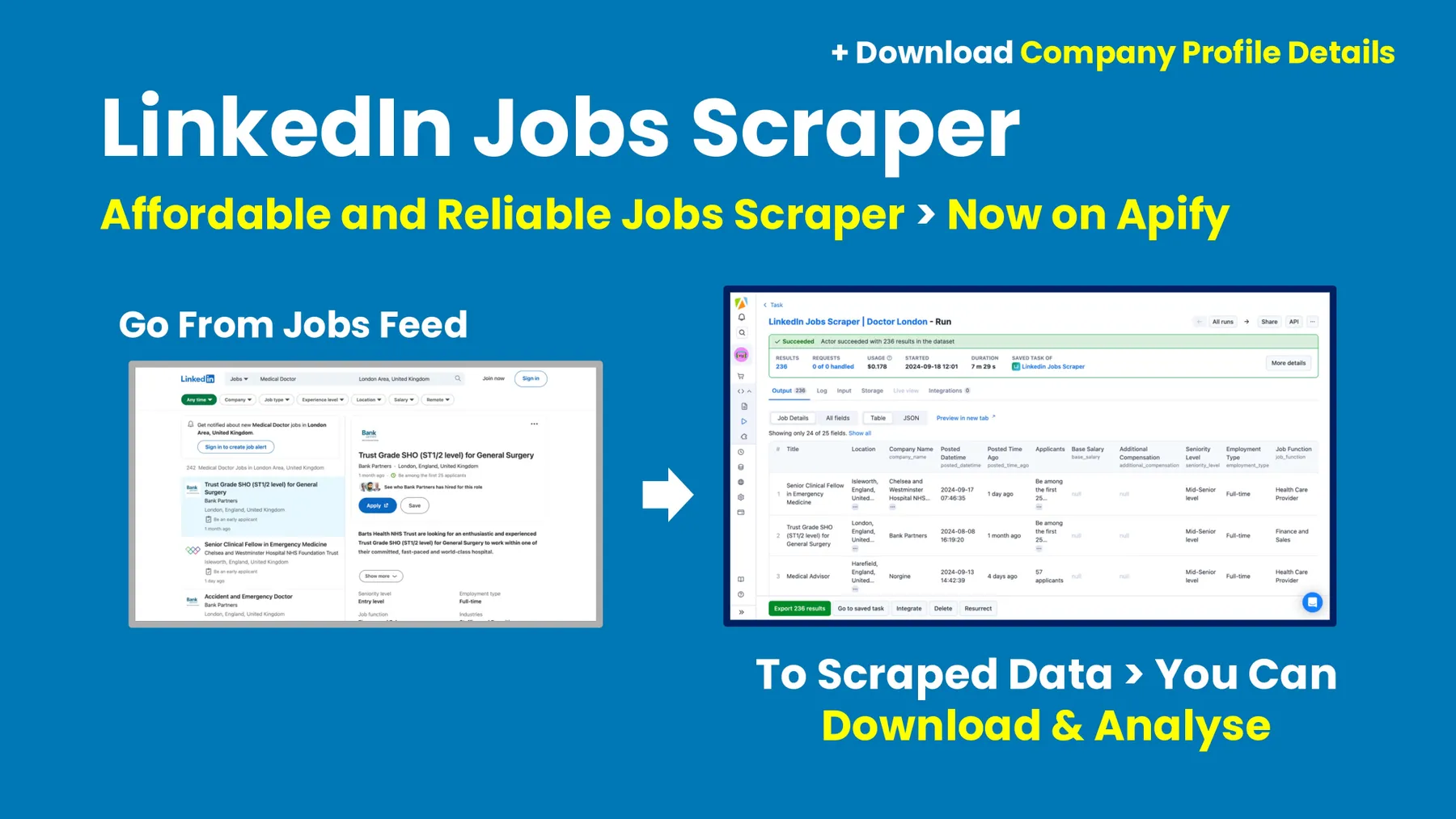

🔥 - LinkedIn Jobs Scraper Apify Actor

The scraper will do its best to scrape all the available jobs; however, LinkedIn may block some requests, leading to fewer results than expected. Please test the scraper before subscribing to check whether this is acceptable for your use case.

🕑 - Access the LinkedIn Jobs Scraper on Apify

Quickly pull fresh job listings from LinkedIn and capture company profiles in one place to speed up your job hunt or streamline recruitment efforts. Use keywords and a full range of filters to narrow your search, and extract crucial details such as job titles, descriptions, company profiles, and recruiter contact information. With this scraper, you’ll have everything you need in one tool, including company logos, profile links, and more, making it ideal for businesses and recruitment agencies. Export the data in formats ready for analysis, or connect through the API or Python for deeper integration with other tools. This scraper is well suited to finding roles and their associated companies worldwide, covering all regions available on LinkedIn and saving you hours of manual searching.

❓ - What is the LinkedIn Jobs Scraper Used For?

The LinkedIn Jobs Scraper simplifies downloading relevant job listings and associated company profiles from LinkedIn. Whether you're a job seeker or a recruitment agency, LinkedIn offers a wealth of opportunities across industries and regions. This scraper not only pulls job listings that match your search criteria but also gathers key details about the companies posting these jobs. With everything in one place, from job descriptions to company profiles, you can save hours of manual searching and quickly access the most up-to-date roles and company details.

It’s like having your own personal LinkedIn API for both jobs and the companies behind them, all in a single, cost-effective tool.

🏢 - Get Company Profiles Alongside Job Listings

One of the standout features of this LinkedIn Jobs Scraper is the ability to scrape not just job listings but also detailed company profiles, all within the same tool. Now you can pull essential job data alongside comprehensive company information—like company name, logo, profile URL, and more. This is perfect for recruitment agencies or businesses that need a full picture of the companies behind the roles they’re sourcing. Instead of paying for separate scrapers (which can cost $30 for jobs and $20 for company data), you get everything bundled together for just $19.99. It’s a smarter, more affordable solution, providing everything you need in one place for one low monthly cost.

🔨 - How to Use the LinkedIn Jobs Scraper

Using the LinkedIn Jobs Scraper is as straightforward as using the LinkedIn website.

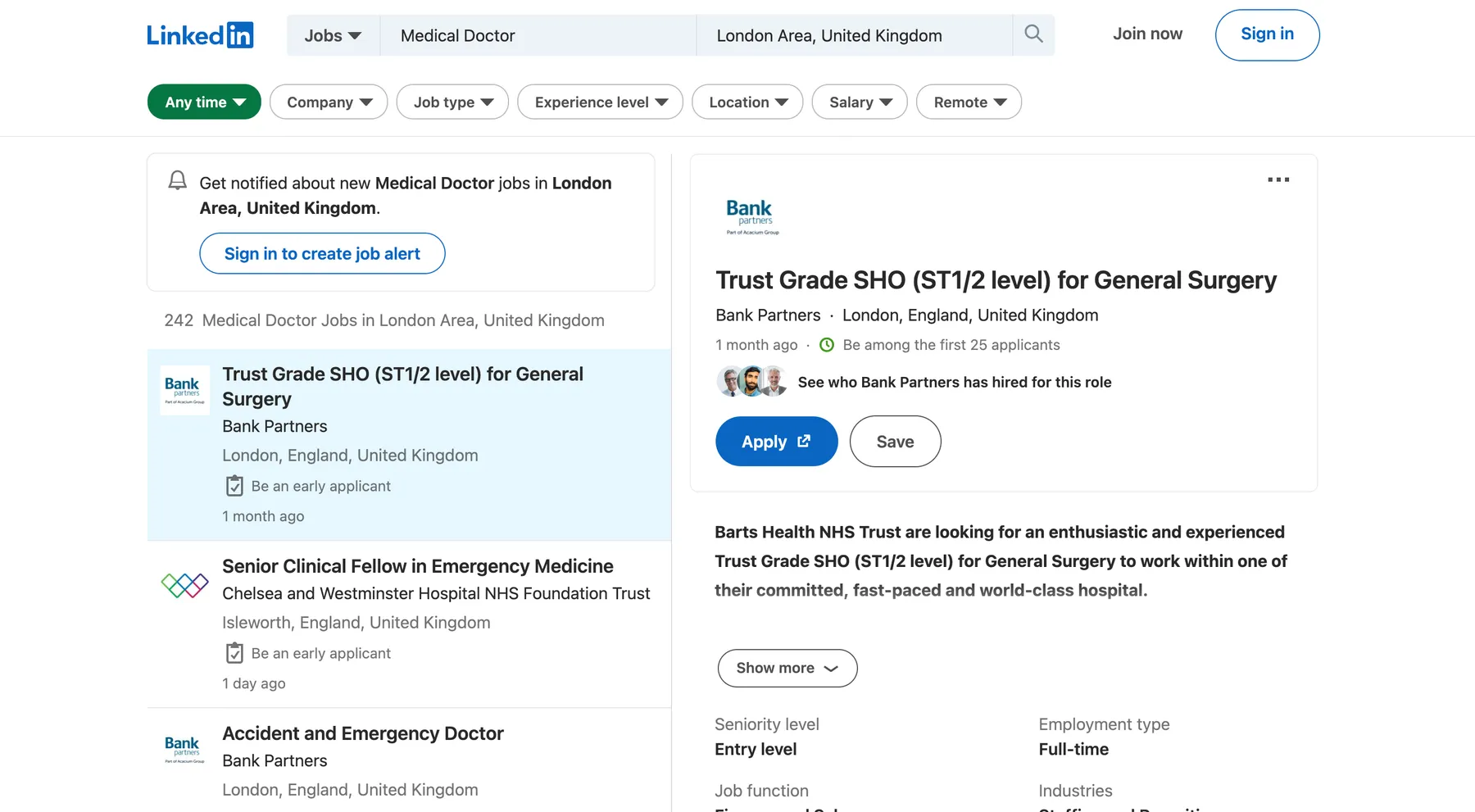

Head over to https://www.linkedin.com/jobs/search using an incognito window (a logged-out session). Next, enter the job title or company you're looking for, along with the geographic location, and click search. You can further refine your results using the filters available in the dropdown on the LinkedIn website, including 'Company', 'Job Type', 'Experience Level', 'Location', 'Salary', and 'Remote' status. The more filters you apply, the more specific your search will be, which generally returns fewer results.

Once you've set the search terms and filters you want to scrape results for, copy the entire URL. Head back to the LinkedIn Jobs Scraper on Apify, paste the LinkedIn URL into the "Search URL" field on the input tab, and click "Start" (or "Save & Start") to scrape the job results.

The actor will return the results from the LinkedIn website, which you can download in JSON, CSV, XML, Excel, HTML Table, RSS, or JSONL formats.

You might find that when applying filters and search terms on a logged-out LinkedIn session, LinkedIn may prompt you to log in to continue. If this happens, simply click the back button twice, and it will take you back to the page you were on, allowing you to continue refining your selection to get the LinkedIn jobs URL you're looking for.

🔢 - How Many Results Can the LinkedIn Scraper Collect?

You can scrape up to 1,000 job listings at a time with the LinkedIn scraper, which should be sufficient for most use cases. If you need to gather more than 1,000 roles, you can break the task into multiple scrapes by adjusting your search criteria using the available filters on the LinkedIn website, and creating multiple tasks on Apify with the different URLs in the Search URL input box. Additionally, you have the option to set a limit on the number of results scraped by using the 'Max Results' feature on the input page.

As a rough guide, the scraper takes approximately 3 minutes to return 50 results from LinkedIn.
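The splitting approach described above can be sketched in a few lines of Python. This is a minimal illustration, not part of the actor: it assumes that `keywords` and `location` are the query parameter names that appear in a URL copied from a LinkedIn jobs search (check this against your own copied URL), and the helper names `build_search_url` and `build_task_inputs` are hypothetical. The only input field taken from this page is `search_url`.

```python
from urllib.parse import urlencode

# Base search page named in the usage instructions above.
BASE_URL = "https://www.linkedin.com/jobs/search"


def build_search_url(keywords: str, location: str) -> str:
    """Return a jobs search URL for one keyword/location pair.

    Assumption: `keywords` and `location` are the query parameter names
    LinkedIn uses; verify against a URL copied from your browser.
    """
    query = urlencode({"keywords": keywords, "location": location})
    return f"{BASE_URL}?{query}"


def build_task_inputs(keywords: str, locations: list[str]) -> list[dict]:
    """Build one input object per location, each meant for its own Apify task."""
    return [{"search_url": build_search_url(keywords, loc)} for loc in locations]


# Split one broad search into three narrower ones, each more likely
# to stay under the 1,000-result limit.
inputs = build_task_inputs("Software Engineer", ["London", "Manchester", "Leeds"])
for task_input in inputs:
    print(task_input["search_url"])
```

Splitting by location is only one option; any LinkedIn filter that partitions the results would serve the same purpose.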

💰 - What's the Cost of Using the LinkedIn Jobs Scraper?

We offer this LinkedIn Jobs Scraper at a monthly rental of $19.99, which is lower than many comparable actors on Apify. We've also optimised the scraper to minimise the cost of each run, with an average price of $0.02 per 50 results. This lets you scrape more job listings at a lower cost per result. Over a year of use, you could save hundreds of dollars compared to other scrapers on the Apify platform, making this a low-cost alternative to Octoparse and PhantomBuster.

This actor is optimised to work with both datacenter and residential proxies. Datacenter proxies result in significantly lower running costs than residential proxies, which is one of the key reasons this actor is more cost-effective than many others on the Apify platform.
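To make the figures above concrete, here is a rough back-of-the-envelope estimator. It assumes cost and run time scale linearly with the number of results, which this page does not guarantee; actual usage depends on proxy type and on how many requests LinkedIn blocks. The function names are hypothetical.

```python
MONTHLY_RENTAL_USD = 19.99       # monthly rental quoted above
COST_PER_50_RESULTS_USD = 0.02   # average run cost quoted above
MINUTES_PER_50_RESULTS = 3       # approximate run time quoted above


def estimate_run(results: int) -> tuple[float, float]:
    """Return (usage cost in USD, run time in minutes) for one run.

    Assumption: both figures scale linearly with the result count.
    """
    batches = results / 50
    cost = round(batches * COST_PER_50_RESULTS_USD, 2)
    minutes = batches * MINUTES_PER_50_RESULTS
    return cost, minutes


def estimate_month(results_per_month: int) -> float:
    """Return rental plus estimated usage for a month, in USD."""
    usage, _ = estimate_run(results_per_month)
    return round(MONTHLY_RENTAL_USD + usage, 2)


cost, minutes = estimate_run(1000)
print(f"1,000 results: about ${cost:.2f} and {minutes:.0f} minutes")
print(f"5,000 results in a month: about ${estimate_month(5000):.2f} in total")
```

Under these assumptions the usage charge stays small next to the rental, so the monthly total is dominated by the $19.99 fee.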

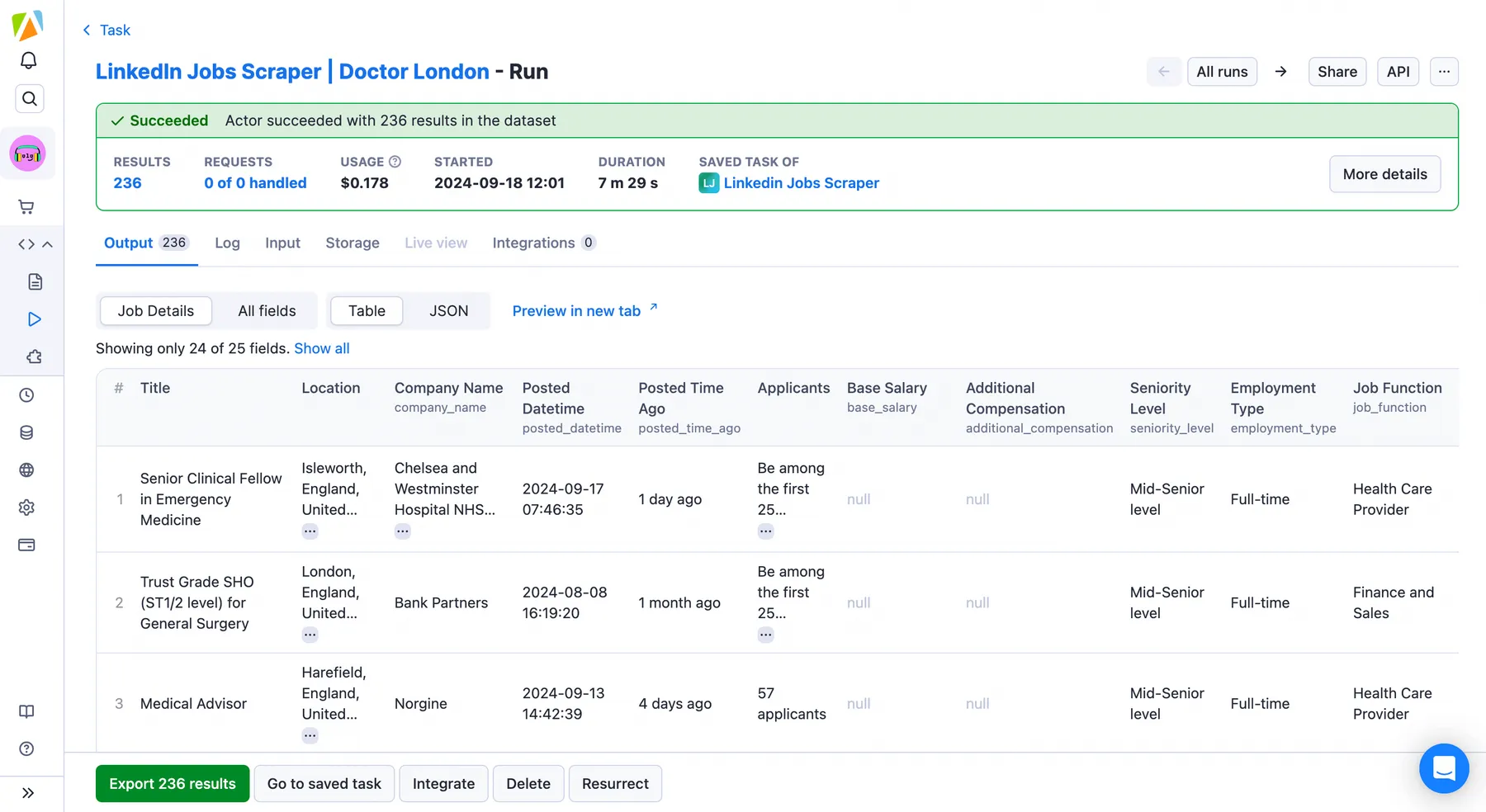

💎 - What Data Can the LinkedIn Jobs Scraper Extract?

The LinkedIn Jobs Scraper can extract all the key information and metadata for each job that matches your filter criteria. Below is an example of the type of data collected, along with a brief explanation of what each field represents:

| Field Name | Field Description |
|---|---|
| title | Specifies the job title, e.g., 'Software Engineer.' |
| location | Shows the geographical location of the job, e.g., 'London, England, United Kingdom' |
| company_name | The name of the company offering the position. |
| posted_datetime | The exact date the job was posted. |
| posted_time_ago | The relative time since the job was posted, e.g., '2 days ago.' |
| applicants | Shows the number of applicants for the role, if available. |
| base_salary | Displays the base salary offered for the role, if provided. |
| additional_compensation | Specifies any additional compensation, such as bonuses or benefits, offered alongside the base salary. |
| seniority_level | The seniority level required for the role, such as 'Entry Level,' 'Mid-Senior level' or 'Internship.' |
| employment_type | The type of employment, such as 'Full-time', 'Contract,' etc. |
| job_function | Specifies the functional area of the job, such as 'Information Technology,' 'Marketing,' or 'Engineering.' |
| industries | Describes the industries relevant to the role, such as 'Staffing and Recruiting,' 'IT Services and IT Consulting,' etc. |
| description_text | The job description in plain text format without HTML formatting. |
| description_html | The job description in HTML format. |
| job_url | The unique URL to the LinkedIn job posting. |
| apply_type | Describes the type of application process, such as 'Easy Apply' or through a third-party website. |
| apply_url | The direct URL to the job application page based on apply_type. |
| recruiter_name | The name of the recruiter responsible for the position. |
| recruiter_detail | The LinkedIn headline for the recruiter responsible for the role, if available. |
| recruiter_image | The profile image URL of the recruiter. |
| recruiter_profile | A link to the LinkedIn profile of the recruiter. |
| company_id | The LinkedIn unique identifier for the company posting the job. |
| company_profile | A link to the LinkedIn profile page of the company. |
| company_logo | The logo image URL for the company posting the role. |
| job_urn | The unique identifier (URN) for the job on LinkedIn. |
| company_website | The official website of the company. |
| company_address_type | The type of address associated with the company, e.g., 'PostalAddress.' |
| company_street | The street address of the company. |
| company_locality | The locality (city) of the company. |
| company_region | The region (state/province) of the company. |
| company_postal_code | The postal code of the company. |
| company_country | The country of the company. |
| company_employees_on_linkedin | The number of employees listed on LinkedIn for the company. |
| company_followers_on_linkedin | The number of followers the company has on LinkedIn. |
| company_cover_image | The URL of the company's cover image on LinkedIn. |
| company_slogan | The slogan or tagline of the company, if available. |
| company_twitter_description | A brief description of the company used for its LinkedIn or Twitter profile. |
| company_about_us | A detailed "About Us" section for the company, including its mission and values. |
| company_industry | The industry in which the company operates, e.g., 'Software Development.' |
| company_size | The size of the company based on employee count, e.g., '11-50 employees.' |
| company_headquarters | The location of the company's headquarters, e.g., 'Delray Beach, Florida.' |
| company_organization_type | The type of organization, e.g., 'Privately Held.' |
| company_founded | The year the company was founded. |
| company_specialties | The company's areas of expertise, e.g., 'Web Development, Mobile Development, React.' |

🪜 - How to Use the LinkedIn Jobs Scraper on Apify

The LinkedIn Jobs Scraper is designed for simplicity. Follow these easy steps to start scraping and download relevant job data:

1. Create a free Apify account.

2. Open the LinkedIn Jobs Scraper (start your 3-day free trial).

3. Copy the URL from LinkedIn for your desired search and paste it in the Search URL input box.

4. Click "Start" and wait for the scraping process to finish.

5. Select your preferred format to export the LinkedIn job data.

Repeat steps 3 to 5 as many times as needed to download all the jobs you're interested in from LinkedIn.

⬇️ - Example Input for the LinkedIn Jobs Scraper

Below is a sample JSON input for the LinkedIn scraper. This input is configured to return all job listings for the term "Medical Doctor" in London. Notice how you only need to copy the URL for your search term on LinkedIn and paste it into the "search_url" input box. You can specify filters and other search criteria using LinkedIn's native interface, making it simple to set up and run your search on Apify.
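The original sample is not reproduced here, so the following is a hedged reconstruction of what such an input might look like, together with a sketch of running it from Python. Only the `search_url` field name comes from this page. The `keywords` and `location` query parameters are assumptions about LinkedIn's URL format, `run_actor` is a hypothetical helper, and the actor ID and API token are placeholders you would supply yourself. The helper relies on the third-party `apify-client` package, which is imported only when the function is called.

```python
import json
from urllib.parse import urlencode


def build_run_input(keywords: str, location: str) -> dict:
    """Build the actor input; `search_url` is the field named on this page.

    Assumption: `keywords` and `location` are LinkedIn's query parameters.
    In practice you can simply paste the URL copied from your browser.
    """
    query = urlencode({"keywords": keywords, "location": location})
    return {"search_url": f"https://www.linkedin.com/jobs/search?{query}"}


def run_actor(token: str, actor_id: str, run_input: dict) -> list[dict]:
    """Run the actor and return its dataset items (hypothetical helper).

    Requires `pip install apify-client`; `token` and `actor_id` are
    placeholders for your own API token and this actor's ID.
    """
    from apify_client import ApifyClient

    client = ApifyClient(token)
    run = client.actor(actor_id).call(run_input=run_input)
    return list(client.dataset(run["defaultDatasetId"]).iterate_items())


run_input = build_run_input("Medical Doctor", "London")
print(json.dumps(run_input, indent=2))
```

Printing the input shows the JSON you would paste into the input tab; calling `run_actor` with your own token and actor ID would start a run and collect the results.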

⬆️ - Example Output for the LinkedIn Jobs Scraper

Below are two sample records in JSON format based on the input provided above. Each field is captured directly from the LinkedIn website. You have multiple download options, including JSON, CSV, XML, Excel, RSS, and JSONL. The flat structure of the JSON format ensures easy parsing and integration into your workflows.
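Because each record is a flat JSON object, post-processing needs very little code. The sketch below writes a few chosen fields to CSV using only the standard library. The field names come from the table earlier on this page, but the two records are hypothetical placeholders, not real scraper output, and `to_csv` is an illustrative helper.

```python
import csv
import io

# Hypothetical placeholder records using field names from the table above.
records = [
    {
        "title": "Example Role A",
        "location": "London, England, United Kingdom",
        "company_name": "Example Company A",
        "job_url": "https://example.com/jobs/a",
    },
    {
        "title": "Example Role B",
        "location": "London, England, United Kingdom",
        "company_name": "Example Company B",
        "job_url": "https://example.com/jobs/b",
    },
]


def to_csv(rows: list[dict], fields: list[str]) -> str:
    """Serialise the chosen fields of flat records to CSV text."""
    buffer = io.StringIO()
    # extrasaction="ignore" drops any fields not listed in `fields`.
    writer = csv.DictWriter(buffer, fieldnames=fields, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = to_csv(records, ["title", "company_name", "job_url"])
print(csv_text)
```

The same pattern works on a JSON export downloaded from Apify once it has been loaded with `json.load`.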

💡 - Why Should I Switch to This LinkedIn Scraper?

If you're already using a LinkedIn scraper on Apify, here's why you should consider switching to this one. Our scraper is designed for maximum reliability, ensuring a high success rate with minimal discrepancies in the data collected. It's also cost-efficient to run, offering excellent value while covering all the details you need, as outlined in the example data provided. We offer responsive support, quickly addressing any issues or feature requests to help you stay productive. Plus, new features and improvements are regularly added based on user feedback, making this tool highly adaptable to your needs.

🏢 - LinkedIn Scraper for Recruitment Agencies

For recruitment agencies and staffing firms, this LinkedIn Jobs Scraper for Recruitment is an essential tool. It allows you to efficiently gather comprehensive job data, including job titles, company information, salary ranges, and recruiter contacts, making it easier to stay ahead of the competition. By automating the data collection process with this LinkedIn Jobs Scraper, you’ll save valuable time and ensure you’re always sourcing the best candidates for your clients before your competitors.

This LinkedIn Jobs Scraper is especially useful for analysing market trends over time, enabling recruitment agencies to spot in-demand skills and shifts in hiring patterns. Whether you’re tracking trends across industries or expanding your talent pool globally, the scraper helps you stay ahead of the curve. For agencies working with international clients, this tool allows you to collect job listings from around the world, making it perfect for scaling recruitment efforts. By keeping your candidate database aligned with current market demands, you can ensure you’re delivering the top talent your clients are searching for, all with the help of this LinkedIn Jobs Scraper for Recruitment.

👋 - Support is Available for the LinkedIn Jobs Scraper

Having used many LinkedIn scrapers ourselves, both on the Apify platform and elsewhere, we understand not only how essential the LinkedIn Jobs Scraper is to your workflow but also how critical it is to address any issues promptly when something doesn't look right. If you encounter any problems or have questions, you can quickly raise an issue and expect a fast response. We proactively test this actor to ensure stability, making it reliable and user-friendly. Rest assured, we value your investment and are committed to providing a service that meets your expectations.

📝 - Helpful Notes for the LinkedIn Scraper

When using this scraper, you can expect the number of results to match the LinkedIn website about 90% of the time for a given set of filter criteria. LinkedIn often includes duplicates or presents roles in ways that can lead to slight discrepancies in the total count. If you notice a significant difference or believe some results are missing, please contact us, and we will promptly investigate. While some factors related to how LinkedIn displays roles are beyond our control, we are committed to ensuring the highest level of accuracy.
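If duplicates do show up in an exported dataset, one simple way to handle them is to deduplicate on the `job_urn` field, falling back to `job_url`. This is a minimal sketch under the assumption that `job_urn` is unique per posting, as the field table describes; `dedupe_jobs` is a hypothetical helper, and the sample records are placeholders.

```python
def dedupe_jobs(records: list[dict]) -> list[dict]:
    """Keep the first record for each job_urn (or job_url if the URN is missing).

    Records with neither field are kept as-is, since they cannot be compared.
    """
    seen = set()
    unique = []
    for record in records:
        key = record.get("job_urn") or record.get("job_url")
        if key is None:
            unique.append(record)
            continue
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical placeholder records: the first two describe the same posting.
sample = [
    {"job_urn": "urn-1", "title": "Example Role A"},
    {"job_urn": "urn-1", "title": "Example Role A"},
    {"job_urn": "urn-2", "title": "Example Role B"},
    {"title": "Record without identifiers"},
]
print(len(dedupe_jobs(sample)))
```

Comparing the deduplicated count with the total shown on LinkedIn gives a fairer picture of whether results are actually missing.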

👍 - Is it Legal to Scrape the LinkedIn Website?

Our web scrapers operate ethically, ensuring they do not collect private user data such as personal email addresses, gender, or an individual’s location. They only gather information that users have explicitly shared publicly. Additionally, we only allow you to use a logged-out session of LinkedIn, so you're accessing publicly available data. We believe that when used for ethical purposes, our scrapers are a safe tool. However, please note that your results may still contain personal data. Such data is protected under GDPR in the European Union and similar regulations globally. You should avoid scraping personal data unless you have a legitimate reason. If you're uncertain about the legality of your reason, we recommend consulting legal counsel. For more information, you can also read the blog post Apify wrote on the legality of web scraping.
