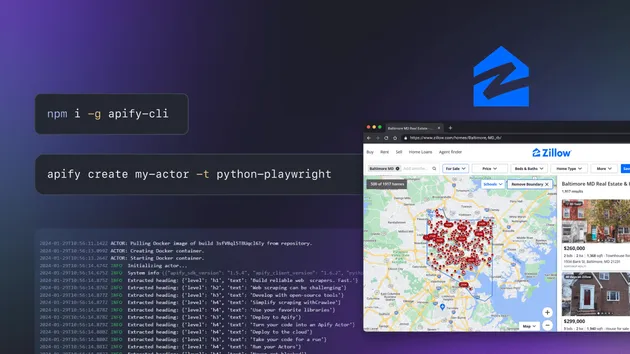

Zillow Detail Scraper

Pay $3.00 for 1,000 results

Get details of Zillow properties from URLs. This Actor can be easily integrated with other Zillow Scrapers.

Missing scrape fields

Closed

Some data fields that I know can be scraped (and are in the example file) are not being pulled. In my use case I need all the foreclosure fields. Only two are pulled currently. There are twenty or so that can be pulled. Can these fields be added to the scrape request?

Hi, could you please share a link to a specific run where you saw these fields missing, so that I could investigate? Thanks in advance

strytsters

Just a few minutes ago I ran "lpS8z64UwJPr8hcib". There is a warning about the number of columns extracted for csv and xlsx, and it suggests xml or json instead. I looked at the xml and json download options as well, and they do not show the data I am looking for either. Below is a snippet from the example output in the information tab, which I know (or assume anyway) has no direct bearing on your designed output, but I believe these fields can be added. I also understand that not all listings will have these fields populated, but where they are I would like to pull that info. I believe the only field that shows up in the output now is foreclosureTypes.

"foreclosureDefaultFilingDate": null, "foreclosureAuctionFilingDate": null, "foreclosureLoanDate": null, "foreclosureLoanOriginator": null, "foreclosureLoanAmount": null, "foreclosurePriorSaleDate": null, "foreclosurePriorSaleAmount": null, "foreclosureBalanceReportingDate": null, "foreclosurePastDueBalance": null, "foreclosureUnpaidBalance": null, "foreclosureAuctionTime": null, "foreclosureAuctionDescription": null, "foreclosureAuctionCity": null, "foreclosureAuctionLocation": null, "foreclosureDate": null, "foreclosureAmount": null, "foreclosingBank": null, "foreclosureJudicialType": "Non-Judicial", "datePostedString": "2023-05-24", "foreclosureTypes": { "isBankOwned": false, "isForeclosedNFS": false, "isPreforeclosure": false... [trimmed]

Hi, thanks a lot for this detailed info!

TLDR: we fixed your issue in version 0.0.41 and you can find a run with the full output here

A bit more info: Zillow uses different data formats for houses that are for sale, for rent or not-for-sale (or "recently sold" in their terminology). The houses in your input had the status PRE_FORECLOSURE, which I hadn't seen before, so the crawler didn't handle it and we only downloaded the data available for not-for-sale houses. I added the configuration for this type of property to the crawler and now it works fine - if you run into more places that seem to be scraped as the wrong type, please do not hesitate to reach out again.

Thanks for raising this. Reports like yours are very helpful to us in maintaining the highest-quality scrapers and covering all the quirks of the target websites.

Best regards

Matěj

strytsters

The fields I was referring to still do not seem to be loading in xlsx or csv. Is this because of the 2000 column limit? The columns are present in an xml download, and I can convert that to a "spreadsheet" based format in Excel. However, my intent was always to run the scraper and then, on the download (xlsx/csv), pick only the 20-30 or so fields I actually need. The "selected fields" selector does not seem to be aware of the added fields, so I am unable to select only those that I need (or I'm not understanding how the "selected fields" option works). If this is something I just "have to live with" and I need to download as xml and convert, I'm okay with that - just checking back in to get your guidance. Thank you very much for the response and the work on this additional functionality.

Yes, the 2000 column limit is unfortunately quite limiting here, because the scraper outputs the data exactly as structured by Zillow. That includes nested fields, arrays and a lot of borderline-useless data, which is easy to filter out in automated JSON processing but annoying in the Excel exports.
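As an illustration of that kind of JSON filtering, here is a minimal Python sketch. It assumes the JSON export is an array of listing objects and that the wanted keys all start with `foreclos` (which also catches `foreclosingBank` from the sample quoted earlier). The helper name `pick_foreclosure_fields` and the stand-in record are hypothetical.

```python
import json


def pick_foreclosure_fields(record, prefix="foreclos"):
    """Keep only the top-level keys of one listing that start with `prefix`."""
    return {key: value for key, value in record.items() if key.startswith(prefix)}


# Stand-in for a downloaded JSON export; keys are taken from the sample in
# this thread, the overall shape (a list of objects) is an assumption.
export_text = json.dumps([
    {
        "datePostedString": "2023-05-24",
        "foreclosureJudicialType": "Non-Judicial",
        "foreclosingBank": None,
    }
])

listings = json.loads(export_text)
trimmed = [pick_foreclosure_fields(item) for item in listings]
print(trimmed)
```

The same function can be pointed at a real export by replacing `export_text` with the contents of the downloaded file.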

I suppose the Selected Fields input also only knows about the first 2000 columns or something like that. However, if you find the exact name of the fields (like foreclosureAuctionFilingDate), you can write it there manually, click the Create "foreclosure[...]" button and it will be included in the Excel download.
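If typing field names into the dialog gets tedious, the same selection can probably be expressed as a download URL. The sketch below only builds the URL and assumes the Apify dataset items endpoint with its `format` and `fields` query parameters; `DATASET_ID` is a placeholder, the helper name is hypothetical, and a private dataset would additionally need an API token. Whether nested paths behave the same way there as in the dialog is not something this sketch verifies.

```python
from urllib.parse import urlencode


def build_export_url(dataset_id, fields, fmt="xlsx"):
    """Build a dataset download URL restricted to the given field names."""
    query = urlencode({"format": fmt, "fields": ",".join(fields)})
    return f"https://api.apify.com/v2/datasets/{dataset_id}/items?{query}"


# DATASET_ID is a placeholder, not a real dataset.
url = build_export_url(
    "DATASET_ID",
    ["foreclosureAuctionFilingDate", "foreclosureLoanAmount"],
)
print(url)
```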

For nested fields, it gets even more complicated after the 2000 columns cutoff. For example, to get foreclosureMoreInfo -> trusteeAddress, you need to manually input both foreclosureMoreInfo and foreclosureMoreInfo/trusteeAddress into the export dialog. This does not apply to fields before the cutoff - you can just include the address field, and it will include all the subfields in the Excel.
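To make the slash-separated naming concrete, here is a small Python sketch of how nested JSON could map to column names such as `foreclosureMoreInfo/trusteeAddress`. It is a local approximation for illustration, not Apify's actual export code; the `flatten` helper and the sample values are hypothetical.

```python
def flatten(value, parent=""):
    """Flatten nested dicts and lists into {"a/b/0": leaf} style columns."""
    if isinstance(value, dict):
        items = value.items()
    elif isinstance(value, list):
        items = enumerate(value)
    else:
        # A leaf value becomes one column named by its full path.
        return {parent: value}
    columns = {}
    for key, child in items:
        path = f"{parent}/{key}" if parent else str(key)
        columns.update(flatten(child, path))
    return columns


# Hypothetical record; only the key names come from this thread.
record = {
    "foreclosureTypes": {"isBankOwned": False, "isPreforeclosure": False},
    "foreclosureMoreInfo": {"trusteeAddress": "example address"},
}
columns = flatten(record)
print(sorted(columns))
```

Each key of `columns` is a candidate name to type into the export dialog, alongside its parent field.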

So it is technically possible, but I understand that it's quite inconvenient. I had success with exporting as JSON, where you can simply choose the whole foreclosureMoreInfo field, and then converting it with this converter: https://products.aspose.app/cells/conversion/json-to-excel . It even produces a nice structured header (attached screenshot), unlike the Apify export. Hope this helps
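For anyone who prefers to do the JSON-to-spreadsheet step locally instead of through an online converter, a rough Python sketch using only the standard library follows. It assumes the JSON export is a list of listing objects; the `WANTED` list, the `to_csv` helper and the sample record are hypothetical, and nested objects are simply kept as JSON text in a single cell rather than expanded into a structured header.

```python
import csv
import io
import json

# Hypothetical selection; replace with the 20-30 fields actually needed.
WANTED = ["datePostedString", "foreclosureJudicialType", "foreclosureMoreInfo"]


def to_csv(listings, fields=WANTED):
    """Write the selected fields of each listing to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields)
    writer.writeheader()
    for item in listings:
        row = {}
        for name in fields:
            value = item.get(name)
            # Keep nested objects in one cell as JSON text.
            nested = isinstance(value, (dict, list))
            row[name] = json.dumps(value) if nested else value
        writer.writerow(row)
    return buffer.getvalue()


# Stand-in for json.load() on a downloaded export (values are made up).
listings = [
    {
        "datePostedString": "2023-05-24",
        "foreclosureJudicialType": "Non-Judicial",
        "foreclosureMoreInfo": {"trusteeAddress": "example address"},
        "unrelatedField": 123,
    }
]
csv_text = to_csv(listings)
print(csv_text)
```

The resulting text can be saved with a `.csv` extension and opened in Excel directly.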

Maximillian Copelli
